Interview with Jean-Louis Giavitto

Jean-Louis Giavitto is a research director at the CNRS. He works mainly on the representation and manipulation of musical objects, in musical analysis and composition as well as in stage performance. Here he explains the key issues raised by the arrival of artificial intelligence in the composer's toolbox...

When and why did IRCAM's research teams begin to take an interest in artificial intelligence as a tool for musical creation?

Researchers at IRCAM have always been interested in this field to some extent. However, for a long time, so-called symbolic algorithmic approaches (which rely on modeling phenomena to obtain a result) gave better results than so-called connectionist approaches (which rely on training neural networks). This was simply because the latter required astronomical quantities of data and computing power that we did not have. In addition, the learning algorithms had not yet been perfected (for example, we did not know how to train deep networks, those with many layers of neurons, efficiently). All this meant that the real springtime of artificial intelligence based on deep neural networks only began in the early 2000s. All of a sudden, these "brute-force" learning algorithms made it possible to take a significant leap forward on problems where the symbolic approaches of researchers in the field, with all their expert knowledge, had hit a kind of glass ceiling.

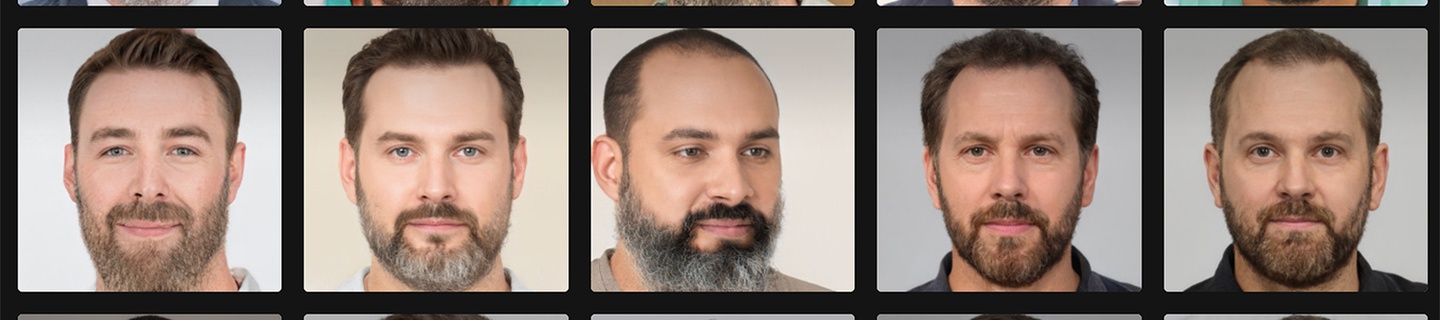

Synthetic faces produced by deep learning techniques from a real face (generated.photos/anonymiser)

Which issues in particular have been addressed and why?

Above all, phenomena that involve complex functions. With the symbolic approach, we try to model a phenomenon by translating experimental observations into scientific laws and reproducing them with our algorithms. Take the example of the voice: we know that there is a source, the larynx, and a resonator, the mouth. We know how to model each of them separately; the problem is linking them. The "coupling function", as it is called, is extremely complex (notably because the sound produced feeds back on this coupling). It can be computed, but programming it in explicit form is almost impossible. One could sum it up by saying that the real world can perhaps be described mathematically, but the functions that would allow us to do so are ugly, difficult to describe, and riddled with exceptions and special cases.

The main contribution of AI is that it frees us from this modeling process: the machine learns without our having to describe everything to it. This means we avoid the most delicate and complex task, which is formulating the model. We just provide a lot of examples. In a way, AI does exactly the opposite of what scientists have always tried to do. Instead of deciphering the basic rules of the world in order to recreate its diversity, it learns from the diversity of the world in order to generate new examples of it. Today, observing what happens in the world takes less time than simulating it.

What tools does this new approach make it possible to develop?

To be honest, these tools, or at least their uses, already existed! For example, we were already doing voice synthesis, but this new approach allows us to do it better. It yields simpler, more powerful tools that are easier for composers to use. It can also change the lives of sound engineers, with tools for production, signal cleaning, mixing...

You mean that, like Mr. Jourdain, we use AI without knowing it?

Absolutely. AI is embedded in many of today's creative tools.

Do composers and/or musicians have specific requests?

They are many and varied, starting with sound synthesis, and voice synthesis in particular. We are currently hosting the artist Judith Deschamps in an artistic research residency, and she aspires to recreate the voice of a castrato. But from what I see, it is above all the object, the concept itself, that challenges them. For the moment, many composers who are interested in AI have mainly tried to incorporate it into their work as an element of the piece rather than as a tool per se. This is what Daniele Ghisi did in La Fabrique des monstres, letting us hear the machine as it learns, with an increasingly structured musical discourse emerging toward the end. What interests him is the learning process itself more than the final result. The same goes for Alexander Schubert in his work Anima.

What are the fantasies, what are the realities?

Some composers worry about the possibility of automatic music production while others, paradoxically, seek it! Either way, I think this is a red herring. Today, there are already people who churn out music by the mile, mainly sound illustration. AI can, or soon will be able to, do this too. It can also produce music to accompany videos, following, for example, the dramatic arc suggested by the images. But I believe that AI is currently unable to produce truly experimental music. This is simply because experimental music is less well defined: it is not governed by clearly established principles (such as those of tonal harmony).


Moreover, this raises the crucial question of data. Over the past century, musical logics have multiplied and become increasingly individual. Each composer develops his own, different from that of his neighbor. This means many worlds for the AI to explore, with less data for each of them. The AI will therefore have a hard time grasping the logic at work, and what it produces will be close to random. In short, music can be produced this way, but it will not necessarily be interesting, and it will explore new territory only by accident. For a composer, as for a listener, it makes little difference whether a machine can compose music like Bach.

Photo: Convergence by Alexander Schubert © Alexander Schubert

What do you think are the risks of such technologies?

The same as with any technology: a standardizing effect. By making artists' lives easier, technologies tend to homogenize aesthetics, a tendency magnified by the very nature of deep learning. But is this normative danger any greater than that posed by the media machine, the public gaze, or globalization? Having access to all the music in the world does not help anyone produce new music.

On the contrary, producing new music requires composers to follow a singular path. This takes the form of experimentation, with deviations that only make sense in the context of the history and the landscape in which they are embedded. Conversely, this normalizing tendency can be an asset, and AI can be used for exactly that: to give voice to an emerging norm. The machine brings both an exteriority (it is not a human who composed this) and a focus on our idiosyncrasies (learning captures what recurs in a corpus). In this, the machine will undoubtedly "move the lines": what are the questions and issues surrounding composition for a human when a machine can also compose?

What are the avenues of research around these systems at IRCAM?

Many. In the field of analysis-synthesis, artificial intelligence systems, even in their simplest form, have changed the game. This is true for cleaning voices, separating sound sources, automating mixing, and indexing and characterizing vast catalogs. In the same vein, artificial intelligence can help us determine the best parameters for a given synthesizer to approach a target sound. The same goes for orchestration. We can even teach the computer to orchestrate a piano part from a given corpus (corresponding to a repertoire, a composer...). It can then orchestrate piano writing by imitation, without ever making the underlying rules explicit.

Do you see other potential horizons to explore?

I have little feedback from musicians or performers, but many tools could potentially be developed, such as an "Autotune" for instruments that would facilitate instrumental gesture and control. There have also been great advances in the field of improvising agents (where the machine improvises with human partners). With these systems, the role of the computer goes beyond that of a simple tool to become a musical companion. By using improvising agents in his performance Music of Choices, the composer Alexandros Markeas sought to create music in a different way. This led him to rethink the form of the concert and to redistribute the roles among the musician, the listeners, and the machine. These improvising agents work from a "memory", a library of sounds that can be enriched at will. For the moment, it is the musicians who choose what goes into it, and the machine then feeds on everything that happens on stage during the performance. But what if this memory were never emptied? What if this library could pass from one artist to another, feeding on concert after concert, like a musician who never forgets anything? By becoming part of a history, this library would become a true creative companion, distinct from every other library.

Music of Choices, performance by Alexandros Markeas, Festival ManiFeste-2022 © IRCAM-Centre Pompidou, photo: Quentin Chevrier


This is of course a thought experiment. But as each artist appropriates the tools in their own way, imposing their own operative structures on them, the companion evolves: how, then, should we receive its proposals? The challenge for composers is to imagine new ways of working with the machine, to "break the rules in the very act of playing".

Beyond their applications, what are the possibilities for improving these learning systems in the context of creative assistance?

This mainly involves improving the learning algorithms (by refining their settings or, more broadly, through the choice of network architecture). It also means paying particular attention to the corpus from which the system learns, which has a significant influence on the results. One of the major challenges is to open the "black box" that AI represents today: by knowing how it works, we are better able to program and control it. This seems both counterintuitive and ironic: we now need to adopt a symbolic approach to the very tool that freed us from a symbolic approach to phenomena. This is a major question: does intelligibility necessarily require a symbolic approach?

And the same is true of art: is art intelligible through the symbolic? Or does it speak directly to our senses? Shouldn't art be a display rather than a demonstration to be constantly dissected and analyzed? Would artificial intelligence be more "artistic" than us in this respect, since it does without understanding? We can see that artificial intelligence forces us to reconsider age-old artistic, philosophical, anthropological, and other problems from a new point of view.

Interview by Jérémie Szpirglas